As her new book of pornographic AI nudes is published, Arvida Byström talks about parasocial relationships, sex work, and whether or not it matters if images are “real”

Beneath the saccharine selfies and images of fruit in lingerie found on Arvida Byström’s Instagram, there is much more than meets the eye. Having first gained notoriety on Tumblr in the 2010s, Byström is today a photographer whose pastel-shaded practice boldly – and often amusingly – examines womanhood in the murky context of the internet. Her work straddles the worlds of performance art, satire, sex and technology, and the past few years have seen the Stockholm-based artist delve into more extreme themes through what she describes as explorations of “digital women”. These have ranged from strange, digitally altered self-portraiture to her unsettling 2023 performance A Cybernetic Doll’s House, which saw the artist interacting with an AI sex doll called Harmony.

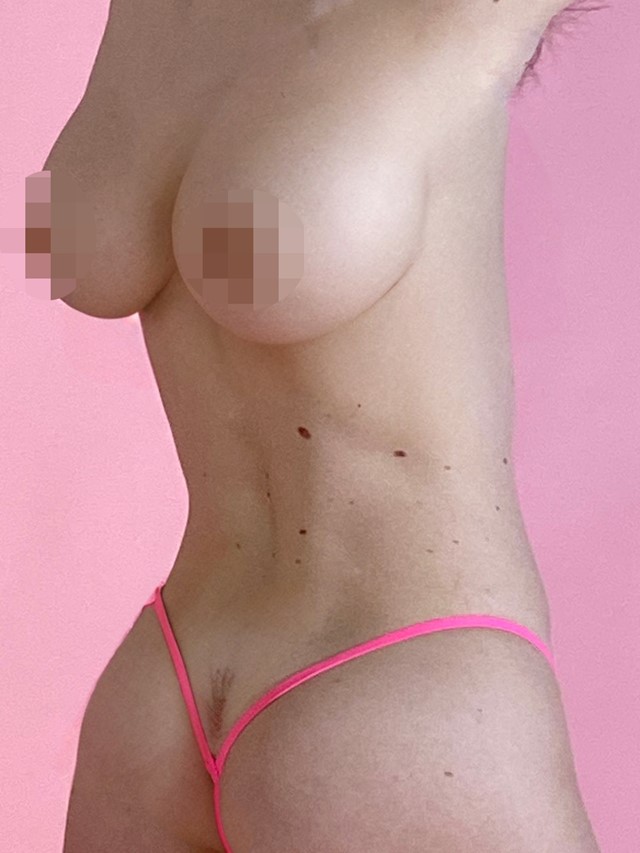

Although Byström often employs her body in her work, her online presence is more akin to an alter ego, or as she puts it, “a mythic humanoid in people’s phones”. Despite this protective layer of performance, her latest project sees her push her personal boundaries further than ever before. Arriving this week in the form of a book titled In The Clouds (published by Nuda), it brings together a series of pornographic self-portraits created using an ethically questionable AI tool called Undress App, which lets anyone turn portraits into deepfake nudes. After using the app on herself, she sold the resulting images as if they were real on a porn subscription site called Sunroom, coupling them with AI sexting conversations trained to sound like her using text from past interviews.

Part performance art, part social experiment, the book collates realistic deepfakes and monstrous mistakes made by the AI – with breasts sprouting out of Byström’s back, for example – alongside thought-provoking texts by celebrity philosopher Slavoj Žižek, novelist Olivia Kan-Sperling and cultural critic CM Edenborg. Disturbing and darkly humorous, In The Clouds investigates the Pandora’s Box of AI, tackling questions of consent, who makes money from bodies online, and whether it matters or not if images are “real”.

Here, speaking in her own words, Byström tells the story of In The Clouds:

“Last summer, I started doing these AI collages of self-portraits using Outpaint. I’d take out a little part of the photo and then tell the AI what I want to pop up there. I played with photography merged with tools like this because a lot of the AI images have this Midjourney look, and that’s not really my style. I only use an iPhone camera because I think there’s something very [familiar] about it. Even though my work gets a little bit more fantastical, I think I want a hint of reality – like you can touch it.

“I was playing around with all these different things, then all of a sudden I saw a post on Twitter about a website called Undress App. It claimed to be able to undress anyone through AI. It felt very similar to what I was already doing to my photographs with Outpaint. I did a little test on Undress App and I put it on Twitter and it got a lot of likes. At the same time, this platform called Sunroom, which is like OnlyFans, got in touch with me. I just felt like the stars aligned telling me to sell these AI nudes of myself.

“I thought it was interesting to explore this parasocial relationship where people think they’ve been seeing the real me, nude, and been jerking off to that. I began questioning if there’s any difference because I am this magical digital creature that most people will probably never meet. My other images online are still altered, they’re photoshopped, they are staged. So in a way, this is as real as the real thing.

“On Sunroom, they have also been talking with an Arvida AI that I made with this company called Iris. They set up a lot of creative, fun ways to play online outside of the app world. We basically made AI based on my interviews and they also made it so it could talk about sex. I put some of the chats I’ve had with men on the platform in the book. A few people have been very sceptical, but there’s also been a very dedicated little base of people that have really liked Arvida AI. Plenty of people have definitely gotten off to her without any sort of suspicion.

“I think the AI deduced from my interviews that I was a person working with bodies. It’s hard to know exactly how it gathers information and from where, but it uses a lot of words like ‘raw, real, unfiltered, authentic’. I found it really funny because I have no interest in exploring authenticity in my work. I think I might be even on the other side of that – I work with the digital. I work with made-up women. Beyond being a wordplay on the iCloud, that’s how we came up with the full title of the book – Imagine being raw and real In The Clouds with Arvida Byström – because that was really part of her language.

“At times, [being part of the Sunroom platform] was really hard, honestly. I also want to try to find a [sensitive] way to talk about this, as I think sex work is very complicated. With OnlyFans, for example, it can be cool, funny and interesting, but I also saw how dangerous it can be. Sex work is also sort of like a service job, and when people pay for a service, often they feel very entitled to do really shitty things to people. That has been very scary and disheartening to see. I think the best way to get rid of that dangerous part of sex work is to make sure that the standard of living for people in our society is high, so they don’t end up in super dangerous situations for money.

“I work with self-portraiture, and this is the only moral way to play with an app like Undress – to use it on yourself. It’s obviously not good that people make these kinds of things without consent. The best outcome [from deepfake porn] is that nudes might not be so explosive anymore, because it can always be a fake. But of course, there’s a billion other outcomes.

“If we talk about AI more generally, you can’t build a car without a lot of hands helping in that process. The technology is trained on images that are created by porn workers and the labelling of different images is also made through incredibly low-wage labour. I truly think AI is like our common unconscious as a society – the money made from it cannot just go to a few billionaires. The only ethical way is to make sure that the money goes back to everyone.

“For me, putting the project in a book is a more controlled way to be able to tell the story. I don’t really know what’s going to happen on the Sunroom app once I reveal these are AI-generated images ... Overall, I think In The Clouds is a fun project in terms of discussing AI and bodies online, who makes money off bodies online, and how parasocial relationships work. That’s the fun part I played with and was trying to understand. I think I want people to laugh, to be a bit shocked and horrified – and also maybe jerk off too.”

In The Clouds by Arvida Byström is published by Nuda, and is out now.